Surat River Fish Digitizer

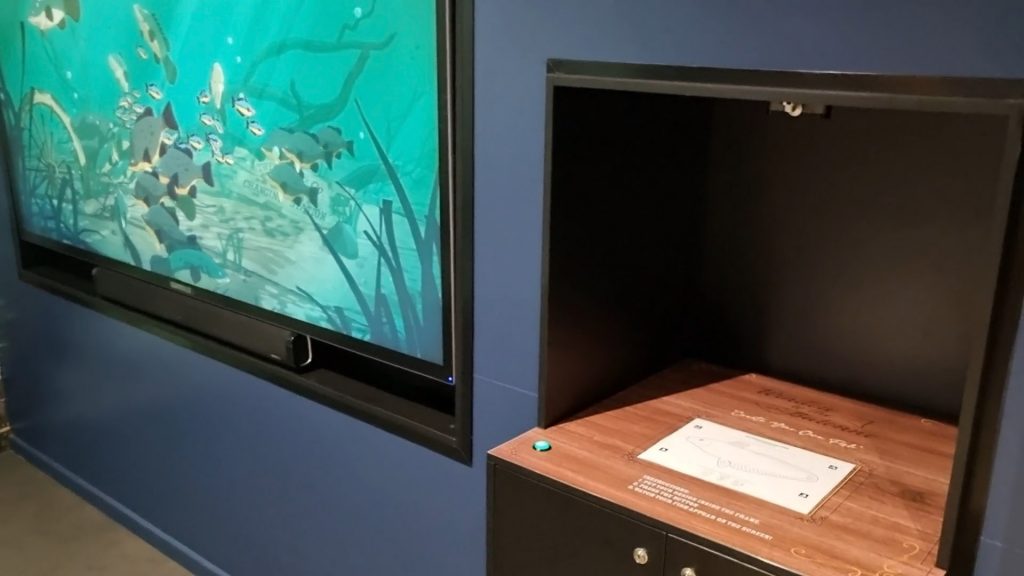

The Surat River Fish Digitizer combines an interactive, real-time river fish simulation with a colouring station kiosk. Visitors colour in one of four fish on a sheet of paper, then scan their creation to add their fish to the simulation in real time.

This project is on display at the Cobb & Co Changing Station in Surat, a small outback town in Queensland, Australia.

Fish Sim

The fish artificial intelligence is based directly on my previous work in The Living Reef project.

A big difference between The Living Reef fish and the fish in this project is that this time I used Unity’s built-in PhysX physics engine. As mentioned in The Living Reef project, I opted there to write my own Euler physics integration step for performance reasons, as we were planning on having hundreds of fish and I wanted to use the JobSystem.

However, this river fish simulation did not require nearly as many fish, as it was a more macro-focused environment. Because the camera is closer to the fish and there are fewer of them, any clipping through the terrain would be more noticeable. Using colliders with the physics engine was therefore the better choice in this situation.

Physics

One issue after adding a rigidbody to each fish was that when they bumped into each other, the collision response looked stiff and rigid, not bendy like a fish should be.

The solution was to roughly emulate soft body physics. I developed a system where each bone in the fish has its own rigidbody, and the bones are joined together using configurable joints. This way collisions have some give in them, as the joints allow the fish to bend. This leads into the second part: integrating animations.
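To give a feel for why a sprung joint adds "give", here is a minimal one-dimensional sketch in Python rather than Unity code. It assumes a single joint angle with unit inertia, driven back towards its rest angle by a spring and damper. The function names and the spring, damper and time-step values are made up for illustration and are not taken from the project.

```python
def step_joint(angle, velocity, target, spring, damper, dt):
    """Advance one joint by one semi-implicit Euler step (unit inertia assumed)."""
    # The spring pulls towards the target angle, the damper resists motion.
    torque = spring * (target - angle) - damper * velocity
    velocity += torque * dt
    angle += velocity * dt
    return angle, velocity


def simulate_bump(impulse, steps=150, dt=0.02, spring=50.0, damper=10.0):
    """Knock a resting joint with an angular impulse and record its angle over time."""
    angle, velocity = 0.0, impulse
    history = []
    for _ in range(steps):
        angle, velocity = step_joint(angle, velocity, 0.0, spring, damper, dt)
        history.append(angle)
    return history


if __name__ == "__main__":
    angles = simulate_bump(2.0)
    print(f"peak bend: {max(angles):.3f} rad, final angle: {angles[-1]:.6f} rad")
```

The joint deflects when hit and then settles back to rest. With one such joint per bone, a collision would bend the chain instead of shoving one rigid body.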

Animation

The artist on this project, Ryan Bargiel, modelled and animated the fish and created the shaders and environment.

While the fish were able to swim, I still needed to integrate his animations into the physics setup. To do this I used the targetRotation on each configurable joint to try to match a rotation taken from the animation. So for each fish I have an invisible GameObject that has an Animator and plays the animation, and a physics fish with rigidbodies that tries to match that pose. There is also a final GameObject which blends between the two based on per-bone weights. It’s difficult to get the physics to match exactly, but with tweaking it gets close enough.
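The per-bone blend can be illustrated with a small Python sketch. It is only a guess at the shape of the idea, not the project's Unity code. It assumes each pose is a dictionary of unit quaternions (w, x, y, z) keyed by a hypothetical bone name, and that a weight of 0 keeps the animated rotation while a weight of 1 keeps the physics rotation.

```python
import math


def slerp(q0, q1, t):
    """Spherical interpolation between two unit quaternions given as (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q are the same rotation, so flip one to take the shorter arc.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: a plain lerp avoids dividing by ~0.
        out = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        out = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in out))
    return tuple(c / norm for c in out)


def blend_pose(animated, physics, weights):
    """Blend two poses bone by bone; bones without a weight stay fully animated."""
    return {
        bone: slerp(animated[bone], physics[bone], weights.get(bone, 0.0))
        for bone in animated
    }


if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    quarter_turn = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
    pose = blend_pose(
        {"spine": identity, "tail": identity},
        {"spine": quarter_turn, "tail": quarter_turn},
        {"spine": 0.0, "tail": 0.5},
    )
    print(pose)
```

In this example the spine follows the animation exactly, while the tail ends up halfway between the animated and physics rotations.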

Image Recognition

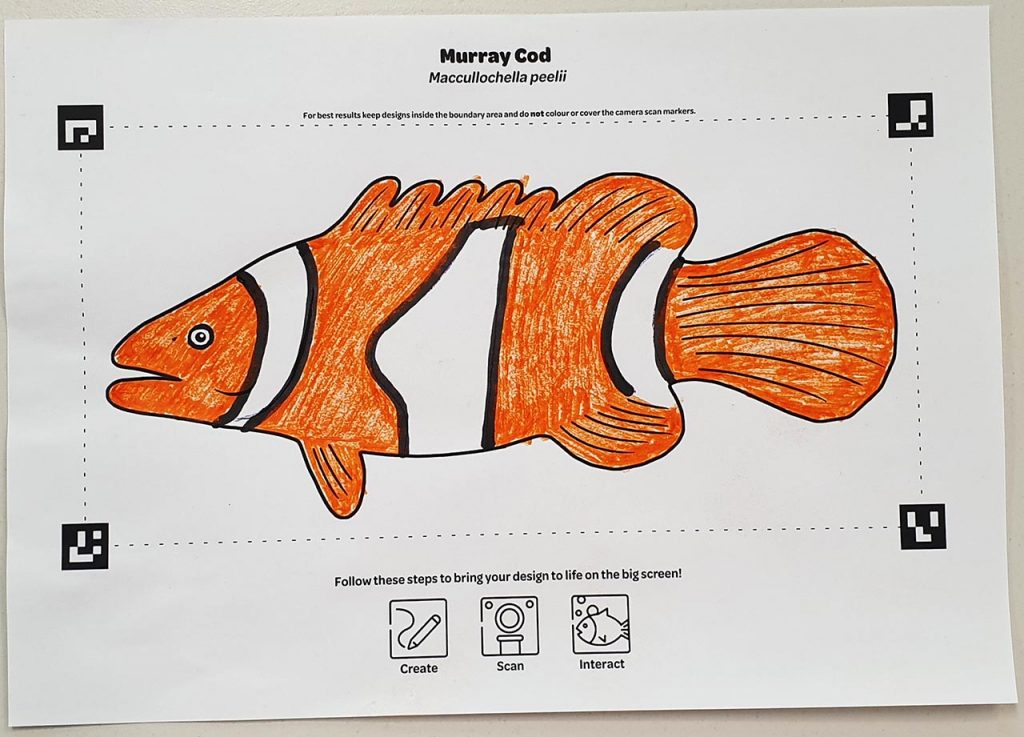

The other part of this project was that users could colour in the outlines of various fish, scan them in, and then see their artwork swimming around.

The tricky part with this is that the user places their creation on a surface and a webcam then takes a snapshot. There is no guarantee that they will place it exactly in the correct position or they may even place it upside down or on an angle. So the first job was to be able to capture a clean image that could then later be mapped.

I didn’t really know where to start to get this to work so I just googled to see what was out there. Vuforia looked like it supported the functionality that I was after but the licensing was expensive. In the end I decided to make my own tech.

Fortunately, there is an open source library for real-time computer vision called OpenCV, and I found a library that integrates it with Unity.

One feature of OpenCV is the ability to recognize ArUco markers. Another is the ability to re-orientate an image if it is skewed or on an angle.

Having just one ArUco marker was not enough to crop the image accurately, so I placed a marker in each corner of the paper. I then used the centroid of each marker to crop out the texture image and filter it with OpenCV. I was impressed by how accurately it was able to crop the image.
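As a rough illustration of the centroid step, the Python sketch below assumes the marker detector has already returned the four corner points of each marker in pixel coordinates, keyed by marker id. The names `centroid` and `crop_quad` and the sample coordinates are hypothetical.

```python
def centroid(corners):
    """Mean of a marker's corner points, each an (x, y) pixel pair."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (sum(xs) / len(corners), sum(ys) / len(corners))


def crop_quad(markers):
    """Map each detected marker id to its centroid; together these outline the crop."""
    return {marker_id: centroid(corners) for marker_id, corners in markers.items()}


if __name__ == "__main__":
    # Made-up detections: marker id -> four corner points in the webcam image.
    detections = {
        0: [(10, 10), (30, 10), (30, 30), (10, 30)],
        1: [(610, 12), (630, 12), (630, 32), (610, 32)],
        2: [(608, 450), (628, 450), (628, 470), (608, 470)],
        3: [(12, 448), (32, 448), (32, 468), (12, 468)],
    }
    print(crop_quad(detections))
```

The four centroids define a quadrilateral that can be handed to a perspective warp to produce a flat, upright image. OpenCV's getPerspectiveTransform and warpPerspective are one way to do that, though which calls the project actually used is not stated here.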

To fix images that were scanned upside down, I devised a system where each corner marker had a unique number and each fish had its own range of numbers. This way I could identify which type of fish was being scanned and also use some logic to correct the corners if they were flipped.
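One way such a numbering scheme could work is sketched below in Python. The exact layout is an assumption: fish 0 uses marker ids 0 to 3, fish 1 uses 4 to 7, and so on, with the ids on each sheet running top-left, top-right, bottom-right, bottom-left. The real project may have assigned its numbers differently.

```python
CORNERS_PER_SHEET = 4  # assumed layout: ids 0-3 are fish 0, ids 4-7 are fish 1, ...


def decode_sheet(centroids):
    """Return (fish_index, corners ordered TL, TR, BR, BL as printed on the paper).

    `centroids` maps each detected marker id to its (x, y) position in the image.
    Ordering by id instead of by image position undoes any rotation of the sheet.
    """
    if len(centroids) != CORNERS_PER_SHEET:
        raise ValueError("expected exactly four markers")
    fish = {marker_id // CORNERS_PER_SHEET for marker_id in centroids}
    if len(fish) != 1:
        raise ValueError("markers belong to different fish sheets")
    ordered = [None] * CORNERS_PER_SHEET
    for marker_id, point in centroids.items():
        ordered[marker_id % CORNERS_PER_SHEET] = point
    return fish.pop(), ordered


def is_upside_down(ordered):
    """True when the sheet's top-left marker sits lower in the image than its bottom-left."""
    top_left, bottom_left = ordered[0], ordered[3]
    return top_left[1] > bottom_left[1]  # image y grows downwards


if __name__ == "__main__":
    # A fish-2 sheet (ids 8-11) placed upside down under the webcam.
    seen = {8: (100, 100), 9: (0, 100), 10: (0, 0), 11: (100, 0)}
    fish_index, corners = decode_sheet(seen)
    print(fish_index, corners, is_upside_down(corners))
```

Because the corners come back in the order they were printed, warping them onto a fixed rectangle yields an upright image even when the paper was placed upside down.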

Texture Mapping

Once a clean image was obtained, the next step was to update the texture on a fish of the same type as the one scanned. The shader itself was quite simple: it renders the scanned image as a secondary texture in place of the main texture. Most of the work was done by Ryan, who had to create the UVs on the fish in a way that minimised seams while working with the scanned-in image.

Of course, an issue with mapping a 2D piece of paper onto a 3D fish model is that the user can’t colour in the parts of the fish that are hidden behind features such as the pectoral fins. To fix this, Ryan laid out the UVs so that these areas sample another part of the fish that the user was able to colour in. This worked well.

Lights, Camera, Action!

The last little bit of this project was to give the user some feedback on whether their fish had been scanned in correctly. The kiosk section where they scanned in their fish was kitted out with BlinkStick programmable LED controllers (https://www.blinkstick.com/). When a button was pushed, the webcam would take a photo and try to process it. If it succeeded I turned the lights green for a few seconds; if it failed, red.

If you are ever near Surat (population: 407), go check out the project at the Cobb & Co museum and give your artistic skills a workout.